Artificial intelligence has long been hailed as a great “equalizer” of creativity, finally putting the ability to create art in all of its myriad forms into the hands of the tech-savvy. Not a creative person? Not an issue.

May 23, 2024:

“The reason we built this tool is to really democratize image generation for a bunch of people who wouldn’t necessarily classify themselves as artists,” said the lead researcher for DALL-E, which turns text prompts into images. Sam Altman, founder of OpenAI, wrote in his book that generative AI will one day account for 95 percent of the work that companies hire creative professionals to do: “All free, instant, and nearly perfect. Images, videos, campaign ideas? No problem.” Or, as another AI startup founder put it: “So much of the world is creatively constipated, and we’re going to make it so that they can poop rainbows.”

But it is a problem for actual artists, and for anybody who cares and thinks deeply about the words, images, and sounds we consume every day. With any promise of disruption comes the reasonable fear that its replacement will be worse, both for the creative professionals who rely on artmaking for their livelihoods and for people who enjoy reading well-written works, who take pleasure in thoughtful visual art, who watch movies not solely to be entertained but because of the surprising, life-affirming, or otherwise meaningful directions a good film might go. Should we take seriously the artistic vision of someone who considers “pooping rainbows” the pinnacle of creativity?

The wrinkle in AI executives’ plot to supplant human creativity is that so far, consumer AI tools are not very good at making art. Generative AI creates content based on recognizing patterns within the data it was trained on, using statistics to determine what the prompter is hoping to get out of it. But if art means more than the images or words that comprise it or the money that it makes, what good is an amalgam of its metadata, divorced from the original context?

Text generators like ChatGPT, image creators like Stable Diffusion, Midjourney, Lensa, and DALL-E, the song-making tool Suno, and text-to-video generators like Runway and Sora can produce content that looks like human-made writing, music, or visuals by virtue of having been trained on a great many human-made works. Yet any further examination reveals them to be mostly hollow, boring, and disposable. As one former journalist who now works at Meta remarked, “There hasn’t been a single AI-generated creative work that has really stuck with me … it all just glides right past and disappears.”

What happens when and if the AI tools of the future can someday produce novels that people actually want to read, songs that listeners can’t stop blasting, or films that audiences will pay movie theater prices to see? Or, perhaps the better question is: Is that even possible if the owners of these technologies fundamentally misunderstand why people make and enjoy art?

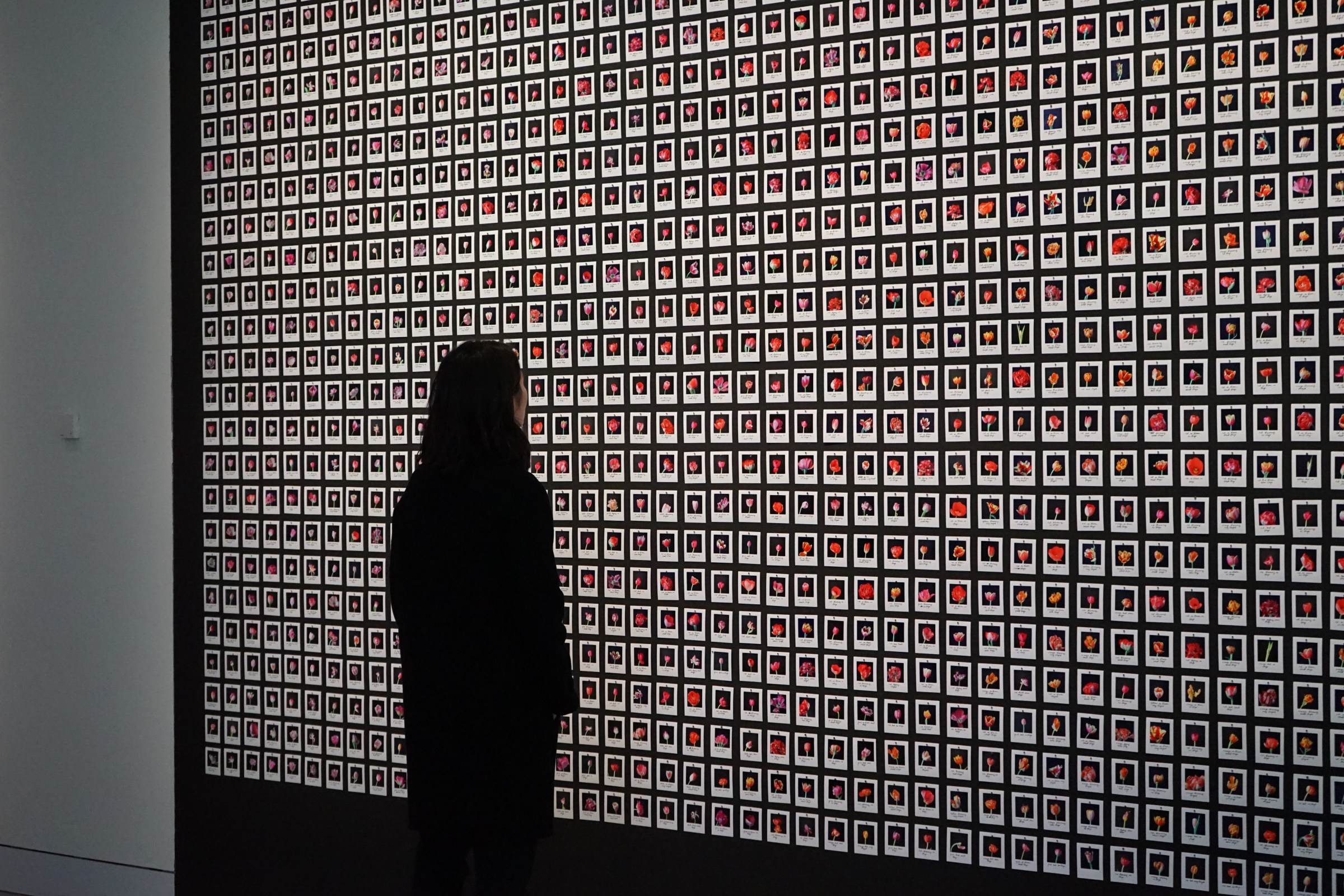

Visual artists have used machine learning for decades, but they’ve often done so in ways that reflect the artist’s process and ideas rather than the machine’s. Anna Ridler is a conceptual artist who uses a type of machine learning called a generative adversarial network, or GAN, that can be trained solely on the images she feeds it.

That isn’t what the current generation of simple text-to-image generators — which are trained on hundreds of millions of images scraped from all over the internet — like Midjourney and DALL-E do. “Conceptually, it’s hard to do interesting things with [them], because [they’re] hidden behind APIs. It’s a black box,” she says of the proprietary (as opposed to open-source) software these companies use that discourages true experimentation.

Instead, the work these generators churn out looks uncanny, smooth, and generally off in ways that are both obvious and not: Generative AI’s reliance on data makes it famously unable to accurately depict human hands, and as psychologist and AI commentator Gary Marcus noted, it also struggles with statistically improbable prompts like “a horse riding an astronaut,” which still seem exclusively the province of human imagination (even the latest AI models will invariably give you a picture of an astronaut riding a horse). “There’s this weird slickness to the images that will become a temporal marker that ‘this was a mid-2020s image,’” Ridler says. “The more you play with these things, the more you realize how hard it is to actually get something interesting and original out of them.”

What AI is good at doing, however, is flooding the internet with mediocre, instantaneous art. “You know what I realized about AI images in your marketing? It sends out the message that you’ve got no budget. It’s the digital equivalent of wearing an obviously fake Chanel bag. Your whole brand immediately appears feeble and impoverished,” wrote artist Del Walker on X.

It’s the same story with text generators. Last year Neil Clarke, the founder of the sci-fi and fantasy literary magazine Clarkesworld, shut down submissions after ChatGPT-generated works accounted for nearly half of what was submitted. “When this hit us last year, I told people they’re worse than any human author we’ve ever seen. And after one update, they’re equal to the worst authors we’ve seen,” he says. “Being a statistical model, it’s predicting the next most likely word, so it doesn’t really understand what it’s writing. And understanding is somewhat essential to telling a good story.”

Great works of storytelling tend to work not just on one level but on multiple — they contain subtext and meaning that a statistical model likely couldn’t grasp with data alone. Instead, Clarke says, the AI-generated stories were flat and unsophisticated, even if they were grammatically perfect.

“Right now, you could have GPT-4 generate something that looks like a full screenplay: It’d be 120 pages, it would have characters, they would have consistent names throughout and the dialogue would resemble things you might find in a movie,” says John August, a screenwriter on the WGA bargaining committee, which gained huge protections against AI last September. “Would it really make sense? I don’t know. It might be better than the worst screenplay you’ve ever read, but that’s a very low bar to cross. I think we’re quite a ways away from being a thing you’re going to want to read or watch.”

AI is already being used in film in a few ways, sometimes to make it appear as though actors’ mouths match up with dubbed foreign languages, for example, or in creating backdrops and background characters. More controversially, AI has also been used in documentary projects: 2021’s Roadrunner: A Film About Anthony Bourdain used AI to make a fake Bourdain speak three lines; a similar tactic was used in 2022’s The Andy Warhol Diaries. In April, the leaders of the Archival Producers Alliance drafted a proposed list of best practices for AI in journalistic film, including allowing for the use of AI to touch up or restore images, but warning that using generative AI to create new material should be done with careful consideration.

This future is far — although nobody can agree on how long — from the one that AI boosters have preached is just around the corner, one of endless hyper-personalized entertainment with the click of a button. “Imagine being able to request an AI to generate a movie with specific actors, plot, and location, all customized to your personal preferences. Such a scenario would allow individuals to create their own movies from scratch for personal viewing, completely eliminating the need for actors and the entire industry around filming,” teased one AI industry group.

Marvel filmmaker Joe Russo echoed this vision in an interview, positing, “You could walk into your house and say to the AI on your streaming platform, ‘Hey, I want a movie starring my photoreal avatar and Marilyn Monroe’s photoreal avatar. I want it to be a romcom because I’ve had a rough day,’ and it renders a very competent story with dialogue that mimics your voice, and suddenly now you have a romcom starring you that’s 90 minutes long.”

It’s certainly possible that the next generation of AI tools makes such a leap that this fantasy could conceivably become a reality. Still, it inevitably raises the question of whether a “very competent” hyper-personalized romcom is what most people want, or will ever want, from the art they consume.

That doesn’t mean AI won’t transform the creative industries

However dystopian this might sound (not least because, as any woman on the internet is well aware, this technology is being used to make nonconsensual sexual images and videos), we actually already have a decent corollary for it. Just as AI is meant to “democratize” artmaking, the creator industry, which was built on the back of social media, was designed to do the same thing: circumvent the traditional gatekeepers of media by “empowering” individuals to produce their own content and in return, offering them a place where their work might actually get seen.

There are clear pros and cons here. While AI is useful in giving emerging creators new tools to make, say, visual and sound effects they might not otherwise have the money or skill to produce, it is equally or perhaps more useful for fraud, in the form of unthinkably enormous amounts of phone scams, deepfakes, and phishing attacks.

Ryan Broderick, who often discusses the cultural impact of AI on his newsletter Garbage Day, points out another comparison between social media and generative AI. “My fear is that we’re hurtling really quickly towards a world where rich people can read the words written by humans and people who can’t afford it read words written by machines,” he tells me. Broderick likens it to what’s already happening on the internet in many parts of the world, where the wealthy can afford subscriptions to newspapers and magazines written by professionals while the working classes consume news on social media, where lowest-common-denominator content is often what gets the most attention.

Crucially, social media may have disrupted media gatekeepers and given more people platforms to showcase their art, but it didn’t expand the number of creatives able to make a living doing those things; in many ways it did the opposite. The real winners were and continue to be the owners of these platforms, just as the real winners of AI will be the founders who pitch their products to C-suite executives as replacements for human workers.

Because even if AI can’t create good art without a talented human being telling it what to do, that doesn’t mean it doesn’t pose an existential threat to the people working in creative industries. For the last few years, artists have watched in horror as their work has been stolen and used to train AI models, feeling as though they’re being replaced in real time.

“It starts to make you wonder, do I even have any talent if a computer can just mimic me?” said a fiction writer who used the writing AI tool Sudowrite. Young people are reconsidering whether or not to enter artistic fields at all. In an FTC roundtable on generative AI’s impact on creative industries last October, illustrator Steven Zapata said, “The negative market implications of a potential client encountering a freely downloadable AI copycat of us when searching our names online could be devastating to individual careers and our industry as a whole.”

Cory Doctorow, known for both his science fiction and tech criticism, argues that, in any discussion of AI art, the crucial question should be: “How do we minimize the likelihood that an artist somewhere gets $1 less because some tech bro somewhere gets $1 more?”

How to think about the artistic “threat” of AI

Even as we should take seriously the labor implications of AI — not to mention the considerable ethical and environmental effects — Doctorow argues that it’s essential to stop overhyping its capabilities. “In the same way that pretending ‘Facebook advertising is so good that it can brainwash you into QAnon’ is a good way to help Facebook sell ads, the same thing happens when AI salespeople say, ‘I don’t know if you’ve heard my critics, but it turns out I have the most powerful tool ever made, and it’s going to end the planet. Wouldn’t you like me to sell you some of it?’”

This is how AI salespeople view art: as a commodity to be bought and sold, not as something to do or enjoy. In a 2010 essay on The Social Network, Zadie Smith made the case that the experience of using Facebook was in fact the experience of existing inside Mark Zuckerberg’s mind. Everything was made just so because it suited him: “Blue, because it turns out Zuckerberg is red-green color-blind … Poking, because that’s what shy boys do to girls they are scared to talk to. Preoccupied with personal trivia, because Mark Zuckerberg thinks the exchange of personal trivia is what ‘friendship’ is,” she wrote.

Why should millions (now billions) of people choose to live their lives in this format over any others? Just as we should ask whether using a tool created by a college sophomore preoccupied with control and stoicism is perhaps the best way to connect with our friends, we should also be asking why we should trust AI executives and their supporters to decide anything related to creativity.

One of the more asinine things published last year was Marc Andreessen’s “Techno-Optimist Manifesto,” in which the billionaire venture capitalist whined about the supposed lack of cultural power he and people exactly like him wield in comparison to “ivory tower, know-it-all credentialed expert[s].” These ideological enemies, it is relatively safe to infer, are the sorts of people (ethicists, academics, union leaders) who might concern themselves with the well-being of normal people under his particular view of “progress”: free markets, zero regulation, unlimited investment in technological advancement regardless of what these technologies are actually being used to do.

A slightly funny element of the “Techno-Optimist Manifesto” is how clearly its author’s interest in art fails to extend beyond an average ninth-grader’s familiarity with literature (references include the “hero’s journey,” Orwell, and Harry Potter); in a list of “patron saints of techno-optimism,” Andreessen names a few dozen people, mostly free-market economists, and a single visual artist: Warhol.

On X, a platform run by a different out-of-touch tech billionaire, AI boosters cheer on a world finally rid of human creators, even human beings at all. “This is it. The days of OnlyFans is over,” posted one tech commentator over a video of AI-animated people dancing. “Seems sorta obvious AI will replace the online simp/thot model,” said another. “Cash in on OnlyFans while you can I guess.”

In the same way that people who believe human influencers will be replaced by AI simulacra fail to understand what is compelling about influencers, those who believe AI will somehow “replace Hollywood” or the music and publishing industries betray a lack of curiosity about why we consume art in the first place. Try asking Google’s new AI Overview feature why people love art, for instance, and it will tell you that “watching art can release dopamine.”

People love great art not for the chemicals it releases but because it challenges us, comforts us, confuses us, probes us, attacks us, excites us, inspires us. Because great art is a miracle, because to witness it is to feel the presence of something like God and the human condition, and to remind us that they are perhaps the same thing. It’s no coincidence that AI has widely been compared to a cult; there is almost a religious zeal to its adherents’ beliefs that one day AI will become omnipotent. But if you look at art and all you see is content, or if you look at a picture of a hot girl and all you see are JPGs in the shape of a sexual object, that’s all you’ll get out of it.

Doctorow is willing to stipulate that works created by AI generators might one day be considered an art form, in the same way that sampling went from being looked down upon to being a common and celebrated practice in music. Media theorist Ignas Kalpokas has written that AI art “has a revelatory quality, making visible the layers of the collective unconscious of today’s societies — that is, data patterns — in a way that is in line with the psychoanalytic capacities that [Walter] Benjamin saw in photography and film.” But the more content AI creates, he argues, the more likely that audiences will experience it “in a state of distraction.”

The future of art and entertainment could very well be individuals asking their personal AIs to feed them music, movies, or books created with a single prompt and the press of a button, although the point at which this sort of entertainment will be good enough to hold our attention feels much farther out of reach. If there comes a day when this becomes the norm, the creative industries will, as they have for more than a century, adapt.

“The history of the professional creative industry is competition — TV competing with film competing with radio,” explains Lev Manovich, an AI artist and digital culture theorist. “Maybe [the industry] becomes more about live events, maybe human performance will become even more valuable. If machines can create Hollywood-level media, the industry will have to offer something else. Maybe some people will lose jobs, but then new jobs will be created.”

I wondered what, if anything, could entice someone like Clarke, the editor of the science fiction literary magazine, to actually publish AI-generated fiction right now, with the technology as it exists. His answer articulated every qualm that I and many in the creative industries have about the notion that AI can do the same work as an artist. “I am willing to accept an AI story when an AI decides to write a story of its own free will and picks me as the place that it wants to send it to. It’d be no different if an alien showed up on the planet: I wouldn’t say no. At that point, it’s a new life,” he said. “But that’s a science fiction scenario. It’d be kind of neat if I get to see it during my lifetime, but I’m not gonna hold my breath.”