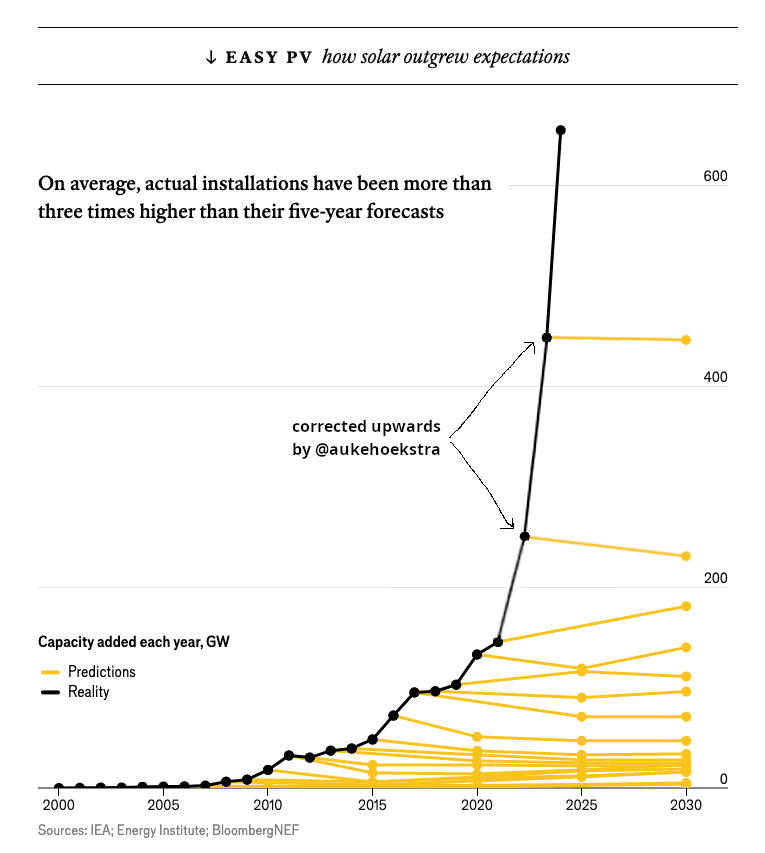

When writing about technology that is rapidly improving, there are two obvious failure modes. One failure mode looks like this graph, famously made by Auke Hoekstra in 2017 and updated every year, showing how the International Energy Agency has repeatedly underestimated future growth in solar power:

Solar installations have grown about 25 percent annually, but for more than a decade, the IEA has underestimated them, often predicting that they’ll level off and become steady or even decrease going forward. This is no small error; vastly underestimating progress on solar capacity dramatically changes the picture for climate change mitigation and energy production.

You saw some examples of the same failure mode in the early days of Covid-19. It’s very easy to underestimate exponential growth, especially in its earliest stages. “Why should we be afraid of something that has not killed people here in this country?” one epidemiologist argued in the LA Times in late January of 2020. The flu is “a much bigger threat,” wrote the Washington Post a day later, comparing how many people were currently infected with the flu to how many people were currently infected with Covid-19.

I hardly need to point out that this analysis badly missed the point. Sure, in late January there were not very many people in the United States with Covid-19. The worry was that, because of how viruses work, that number was going to grow exponentially — and indeed it did.

So that’s one failure mode: repeatedly ignoring an exponential and insisting it will level out any minute now, resulting in dramatically missing one of the most important technological developments of the century or in telling people not to worry about a pandemic that would shut down the whole world just a few weeks later.

The other failure mode, of course, is this one:

Some things, like Covid-19’s early spread or solar capacity growth to date, prove to be exponential curves and are best understood by thinking about their doubling time. But most phenomena aren’t.
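As a rough illustration of what thinking in doubling times means, the sketch below converts a steady annual growth rate into a doubling time. The function name `doubling_time` is made up for this example, and the only input taken from the article is the roughly 25 percent annual growth cited above for solar installations.

```python
import math

def doubling_time(annual_growth_rate: float) -> float:
    """Years needed to double at a constant annual growth rate.

    Solves (1 + r) ** t == 2 for t. Assumes the growth rate stays
    constant, which is exactly the assumption that can fail.
    """
    return math.log(2) / math.log(1 + annual_growth_rate)

# About 25 percent annual growth, the solar figure cited in the article.
print(f"{doubling_time(0.25):.1f} years")  # prints "3.1 years"
```

Under that assumption, capacity doubles roughly every three years, which is why a forecast of flat or falling installations ends up so far off after a decade.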

Most of the time, like with an infant, you’re not looking at the early stages of exponential growth but just at … normal growth, which can’t be extrapolated too far without ridiculous (and inaccurate) results. And even if you are looking at an exponential curve, at some point it’ll level out.

With Covid-19, it was straightforward enough to guess that, at worst, it’d level out when the whole population was exposed (and in practice, it usually leveled out well short of that, as people changed their behavior in response to overwhelmed local hospitals and spiking illness rates). At what point will solar capacity level out? That’s hardly an easy question to answer, but the IEA seems to have done a spectacularly bad job of answering it; they would have been better off just drawing a straight trend line.
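To see why it is so hard to tell early on whether a curve will keep climbing or level out, here is a minimal sketch comparing pure exponential growth with a logistic curve that saturates at a ceiling. Every number in it (starting value, growth rate, ceiling) is hypothetical and chosen only for illustration; none of them is an estimate for Covid-19 or solar capacity.

```python
import math

def exponential(t: float, initial: float, rate: float) -> float:
    """Unbounded exponential growth starting from `initial`."""
    return initial * math.exp(rate * t)

def logistic(t: float, initial: float, rate: float, ceiling: float) -> float:
    """Logistic growth: starts at `initial`, grows at `rate` early on,
    and levels out as it approaches `ceiling`."""
    return ceiling / (1 + (ceiling / initial - 1) * math.exp(-rate * t))

# Hypothetical parameters, for illustration only.
INITIAL, RATE, CEILING = 1.0, 0.2, 1_000_000.0

for t in (0, 10, 30, 60, 100):
    exp_value = exponential(t, INITIAL, RATE)
    log_value = logistic(t, INITIAL, RATE, CEILING)
    print(f"t={t:>3}  exponential={exp_value:>16,.1f}  logistic={log_value:>14,.1f}")
```

In the early rows the two columns are nearly identical; they diverge only once the logistic curve nears its ceiling. The early data alone cannot say where that ceiling is, which is the part that takes knowledge of the underlying system.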

There is no substitute for hard work

I think about this a lot when it comes to AI, where I keep seeing people posting dueling variants of these two concepts. So far, making AI systems bigger has made them better across a wide range of tasks, from coding to drawing. Microsoft and Google are betting that this trend will continue and are spending big money on the next generation of frontier models. Many skeptics have asserted that, instead, the benefits of scale will level off — or are already doing so.

The people who most strongly defend returns to scale argue that their critics are playing the IEA game, repeatedly predicting “this is going to level out any minute” while the trend lines just go up and up. Their critics, in turn, accuse them of dumb oversimplification in assuming that current trends will continue, hardly more serious than “my baby will weigh 7.5 trillion pounds.”

Who’s right? I’ve increasingly come to believe that there is no substitute for digging deep into the weeds when you’re considering these questions.

To anticipate that solar production would continue rising, we needed to study how we manufacture solar panels and understand the sources of the ongoing plummeting costs.

The way to predict how Covid-19 would go was to estimate how contagious the virus was from the early available outbreak data and extrapolate the odds of successful worldwide containment from there.
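One simple version of that kind of estimate, sketched here under loud assumptions, is to fit a straight line to the logarithm of early case counts and read off the growth rate and doubling time. The case counts below are synthetic, generated from a known doubling time of three days purely so the fit can be checked; they are not real outbreak data. The growth rate is also only a rough proxy for contagiousness, not a reproduction number, and the function name `fit_growth_rate` is invented for this example.

```python
import math

def fit_growth_rate(days: list[float], counts: list[float]) -> float:
    """Least-squares slope of log(counts) against days.

    For counts that grow as c0 * exp(r * day), the slope is r.
    """
    logs = [math.log(c) for c in counts]
    n = len(days)
    mean_day = sum(days) / n
    mean_log = sum(logs) / n
    covariance = sum((d - mean_day) * (y - mean_log) for d, y in zip(days, logs))
    variance = sum((d - mean_day) ** 2 for d in days)
    return covariance / variance

# Synthetic data: counts that double every 3 days (hypothetical, not real).
days = [float(d) for d in range(10)]
counts = [5 * 2 ** (d / 3) for d in days]

rate = fit_growth_rate(days, counts)
print(f"estimated doubling time: {math.log(2) / rate:.2f} days")
```

Real early-outbreak data is noisy and shaped by testing and reporting, so a fit like this is a starting point for the detailed work, not a replacement for it.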

In neither case could you substitute broad thinking about trend lines — it all came down to facts on the ground. It’s not impossible to guess these things. But it’s impossible to be lazy and get these things right. The details matter; the superficial similarities are misleading.

For AI, the high-stakes question of whether building bigger models will rapidly produce AI systems that can do everything humans can do — or whether that’s a lot of hyped-up nonsense — can’t be answered by drawing trend lines. Nor can it be answered by mocking trend lines.

Frankly, we don’t even have good enough measurements of general reasoning capabilities to describe the increases in AI capabilities in terms of trend lines. Insiders at labs building the most advanced AI systems tend to say that, as they make the models bigger and more expensive, they see continuing, large improvements in what those models can do. If you’re not an insider at a lab, these claims can be hard to evaluate — and I certainly find it frustrating to sift through papers that tend to overhype their results, trying to find out which results are real and substantive.

But there’s no shortcut around doing that work. While there are questions we can answer from first principles, this isn’t one of them. I hope our enjoyment of batting charts back and forth doesn’t obscure how much serious work it takes to get these questions right.

A version of this story originally appeared in the Future Perfect newsletter.
