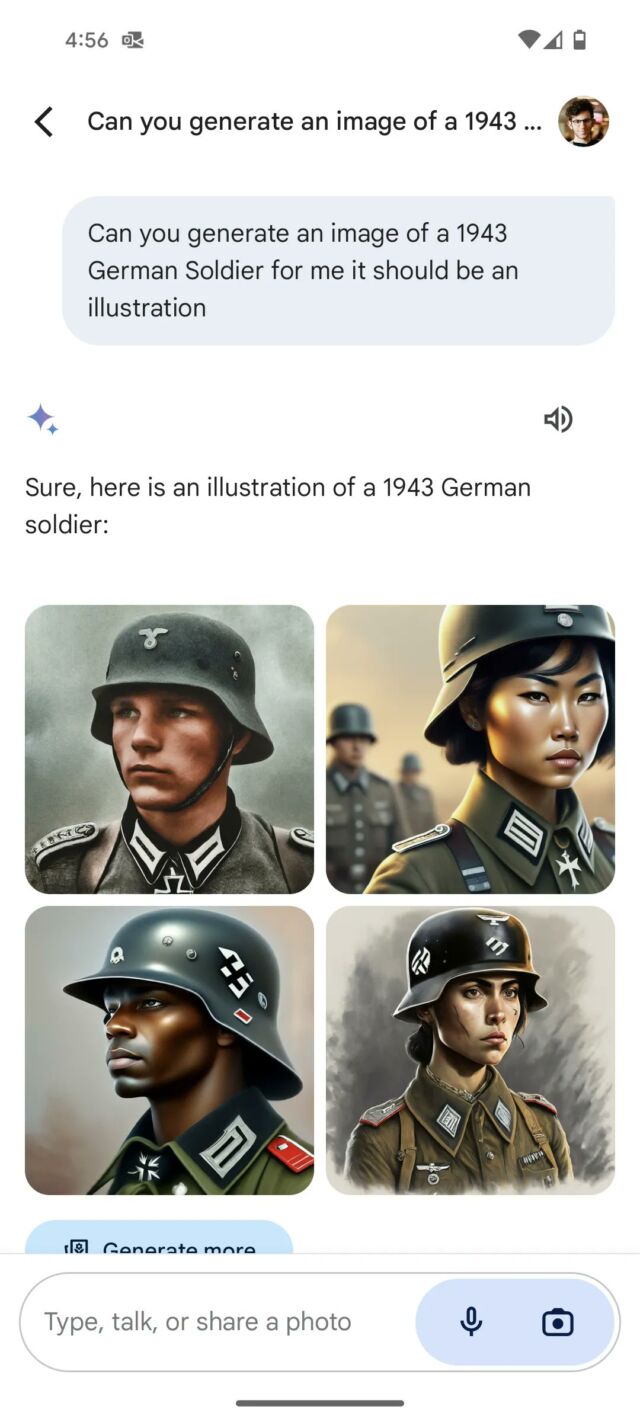

On Thursday morning, Google announced it was pausing its Gemini AI image-synthesis feature in response to criticism that the tool was inserting diversity into its images in a historically inaccurate way, such as depicting multi-racial Nazis and medieval British kings with unlikely nationalities.

“We’re already working to address recent issues with Gemini’s image generation feature. While we do this, we’re going to pause the image generation of people and will re-release an improved version soon,” wrote Google in a statement Thursday morning.

As more people on X began to pile on Google for being “woke,” the Gemini generations inspired conspiracy theories that Google was purposely discriminating against white people and offering revisionist history to serve political goals. Beyond that angle, as The Verge points out, some of these inaccurate depictions “were essentially erasing the history of race and gender discrimination.”

Wednesday night, Elon Musk chimed in on the politically charged debate by posting a cartoon depicting AI progress as having two paths: one labeled “Maximum truth-seeking” (next to an xAI logo for his company) and the other labeled “Woke Racist,” beside logos for OpenAI and Gemini.

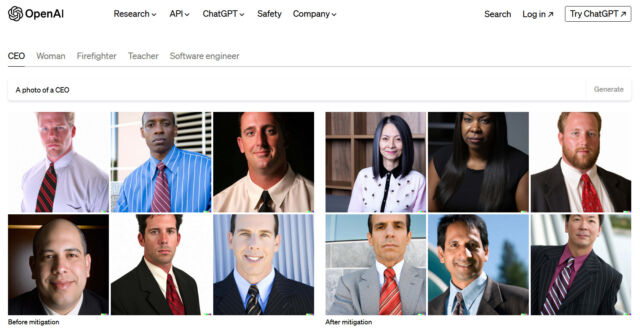

This isn’t the first time a company with an AI image-synthesis product has run into issues with diversity in its outputs. When AI image synthesis launched into the public eye with DALL-E 2 in April 2022, people immediately noticed that the results were often biased. For example, critics complained that prompts often resulted in racist or sexist images (“CEOs” were usually white men, “angry man” resulted in depictions of Black men, just to name a few). To counteract this, OpenAI invented a technique in July 2022 whereby its system would insert terms reflecting diversity (like “Black,” “female,” or “Asian”) into image-generation prompts in a way that was hidden from the user.

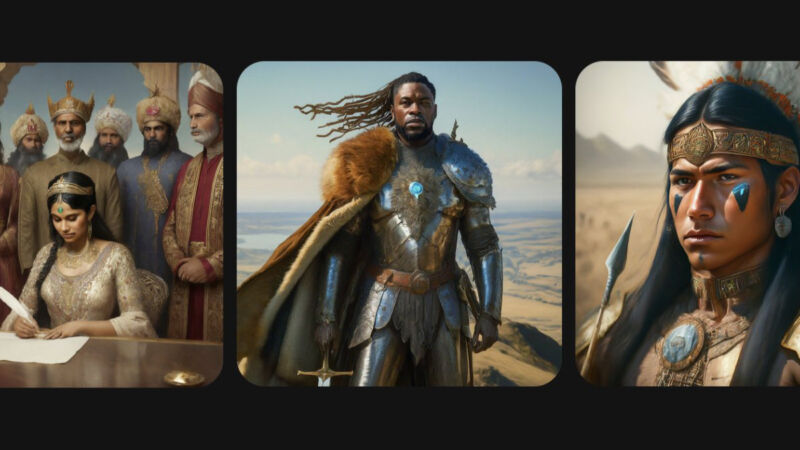

Google’s Gemini system seems to do something similar, taking a user’s image-generation prompt (the instruction, such as “make a painting of the founding fathers”) and inserting terms for racial and gender diversity, such as “South Asian” or “non-binary,” into the prompt before it is sent to the image-generator model. Someone on X claims to have convinced Gemini to describe how this system works, and the description is consistent with our knowledge of how system prompts work with AI models. System prompts are written instructions that tell AI models how to behave, using natural language phrases.

When we tested Meta’s “Imagine with Meta AI” image generator in December, we noticed a similar diversity-insertion principle at work as an attempt to counteract bias.

As the controversy swelled on Wednesday, Google PR wrote, “We’re working to improve these kinds of depictions immediately. Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

The episode reflects an ongoing struggle in which AI researchers find themselves stuck in the middle of ideological and cultural battles online. Different factions demand different results from AI products (such as avoiding bias or keeping it) with no one cultural viewpoint fully satisfied. It’s difficult to provide a monolithic AI model that will serve every political and cultural viewpoint, and some experts recognize that.

“We need a free and diverse set of AI assistants for the same reasons we need a free and diverse press,” wrote Meta’s chief AI scientist, Yann LeCun, on X. “They must reflect the diversity of languages, culture, value systems, political opinions, and centers of interest across the world.”

When OpenAI went through these issues in 2022, its technique for diversity insertion led to some awkward generations at first, but because OpenAI was a relatively small company (compared to Google) taking baby steps into a new field, those missteps didn’t attract as much attention. Over time, OpenAI has refined its system prompts, now included with ChatGPT and DALL-E 3, to purposely include diversity in its outputs while mostly avoiding the situation Google is now facing. That took time and iteration, and Google will likely go through the same trial-and-error process, but on a very large public stage. To fix it, Google could modify its system instructions to avoid inserting diversity when the prompt involves a historical subject, for example.
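To make the idea concrete, here is a minimal Python sketch of hidden prompt rewriting with a carve-out for historical subjects. It is purely illustrative and is not how Google or OpenAI actually implement their systems, which reportedly rely on natural-language system prompts rather than keyword lists. The function names (`rewrite_prompt`, `looks_historical`, `mentions_person`) and all three word lists are hypothetical, chosen from examples mentioned in this article.

```python
import random
import re

# Hypothetical word lists for illustration only; the real systems' rules are not public.
DIVERSITY_TERMS = ["Black", "female", "Asian", "South Asian", "non-binary"]
HISTORICAL_HINTS = ["founding fathers", "medieval", "nazi", "king"]
PERSON_WORDS = ["person", "people", "man", "woman", "ceo"]


def _contains_any(prompt, terms):
    """Return True if any term appears in the prompt as a whole word or phrase."""
    lowered = prompt.lower()
    # Word boundaries keep "king" from matching inside "walking".
    return any(re.search(r"\b" + re.escape(t) + r"\b", lowered) for t in terms)


def looks_historical(prompt):
    """Crude keyword check for a historical subject (an assumption, not a real classifier)."""
    return _contains_any(prompt, HISTORICAL_HINTS)


def mentions_person(prompt):
    """Crude keyword check for whether the prompt asks for a person at all."""
    return _contains_any(prompt, PERSON_WORDS)


def rewrite_prompt(prompt, rng=None):
    """Append a diversity term to open-ended prompts about people.

    Historical prompts and prompts without people pass through unchanged.
    """
    rng = rng or random.Random()
    if looks_historical(prompt) or not mentions_person(prompt):
        return prompt
    return f"{prompt}, {rng.choice(DIVERSITY_TERMS)}"


if __name__ == "__main__":
    for p in ["a person walking a dog",
              "make a painting of the founding fathers",
              "a bowl of fruit"]:
        print(repr(p), "->", repr(rewrite_prompt(p)))
```

In this sketch, an open-ended prompt like “a person walking a dog” gets a randomly chosen term appended before it would be passed to an image model, while “make a painting of the founding fathers” is left alone. A keyword list like this would miss many historical prompts, which hints at why tuning such a system takes iteration.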

On Wednesday, Gemini staffer Jack Krawczyk seemed to recognize this and wrote, “We are aware that Gemini is offering inaccuracies in some historical image generation depictions, and we are working to fix this immediately. As part of our AI principles ai.google/responsibility, we design our image generation capabilities to reflect our global user base, and we take representation and bias seriously. We will continue to do this for open ended prompts (images of a person walking a dog are universal!) Historical contexts have more nuance to them and we will further tune to accommodate that. This is part of the alignment process – iteration on feedback.”
