One week after its last major AI announcement, Google appears to have upstaged itself. Last Thursday, Google launched Gemini Ultra 1.0, which supposedly represented the best AI language model Google could muster—available as part of the renamed “Gemini” AI assistant (formerly Bard). Today, Google announced Gemini Pro 1.5, which it says “achieves comparable quality to 1.0 Ultra, while using less compute.”

Congratulations, Google, you’ve done it. You’ve undercut your own premier AI product. While Ultra 1.0 is possibly still better than Pro 1.5 (what even are we saying here), Google presented Ultra as a key selling point of the “Gemini Advanced” tier of its Google One subscription service. And now it’s looking a lot less advanced than it did seven days ago. All this is on top of the confusing name-shuffling Google has been doing recently. (Just to be clear, although it’s not really clarifying at all, the free version of Bard/Gemini currently uses the Pro 1.0 model. Got it?)

Google claims that Gemini 1.5 represents a new generation of LLMs that “delivers a breakthrough in long-context understanding,” and that it can process up to 1 million tokens, “achieving the longest context window of any large-scale foundation model yet.” Tokens are fragments of a word, and the context window is the number of tokens a model can take into account at once. The first part of the claim about “understanding” is contentious and subjective, but the second part is probably correct. OpenAI’s GPT-4 Turbo can reportedly handle 128,000 tokens in some circumstances, and 1 million is quite a bit more: about 700,000 words. A larger context window allows for processing longer documents and having longer conversations. (The Gemini 1.0 model family handles 32,000 tokens max.)
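To put those context-window figures side by side, here is a rough back-of-the-envelope sketch in Python. The conversion rate of 700 words per 1,000 tokens is an assumption taken from the "1 million tokens is about 700,000 words" estimate above; real tokenizers vary by language and content, and the function names are ours, not part of any Google or OpenAI API.

```python
# Rough context-window arithmetic. The ratio below is an ASSUMPTION derived
# from the article's estimate (1,000,000 tokens ~ 700,000 words); actual
# tokenizers produce different ratios depending on the text.
WORDS_PER_1000_TOKENS = 700

# Context-window sizes as reported in the article.
CONTEXT_WINDOWS = {
    "Gemini 1.0 family": 32_000,
    "GPT-4 Turbo": 128_000,
    "Gemini 1.5 Pro (max)": 1_000_000,
}


def approx_words(tokens: int) -> int:
    """Estimate how many words fit in a given number of tokens."""
    return tokens * WORDS_PER_1000_TOKENS // 1000


def tokens_needed(word_count: int) -> int:
    """Estimate the tokens needed for a document, rounding up."""
    return -(-word_count * 1000 // WORDS_PER_1000_TOKENS)


def fits(word_count: int, window_tokens: int) -> bool:
    """Return True if a document of word_count words fits in the window."""
    return tokens_needed(word_count) <= window_tokens


if __name__ == "__main__":
    for name, window in CONTEXT_WINDOWS.items():
        print(f"{name}: {window:,} tokens ~ {approx_words(window):,} words")
    # A hypothetical 100,000-word document (roughly novel length).
    for name, window in CONTEXT_WINDOWS.items():
        print(f"100,000 words fits in {name}: {fits(100_000, window)}")
```

Under this assumed ratio, a 100,000-word document would overflow both the 32,000-token and 128,000-token windows but fit comfortably in 1 million tokens.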

But any technical breakthroughs are almost beside the point. What should we make of a company that trumpeted its AI supremacy to the world, only to partially supersede its own flagship model a week later? Is it a testament to the rapid rate of AI technical progress in Google’s labs, a sign that red tape was holding back Ultra 1.0 for too long, or merely a sign of poor coordination between research and marketing? We honestly don’t know.

So back to Gemini 1.5. What is it, really, and how will it be available? Google implies that like 1.0 (which had Nano, Pro, and Ultra flavors), it will be available in multiple sizes. Right now, Pro 1.5 is the only model Google is unveiling. Google says that 1.5 uses a new mixture-of-experts (MoE) architecture, which means the system selectively activates different “experts” or specialized sub-models within a larger neural network for specific tasks based on the input data.
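For readers unfamiliar with the idea, the selective activation described above can be illustrated with a toy sketch. Google has not published the routing details of Gemini 1.5, so this is not its implementation: the code below shows generic top-k gating, a common mixture-of-experts pattern, and the expert functions, the gate scores, and the `route` helper are all hypothetical stand-ins. In a real model, the gate scores come from a learned layer and the experts are neural sub-networks.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores, experts, x, top_k=2):
    """Run only the top_k highest-scoring experts and blend their outputs.

    gate_scores: one score per expert (hand-picked here; learned in practice).
    experts: list of callables standing in for expert sub-networks.
    """
    # Rank experts by gate score and keep the best top_k.
    ranked = sorted(
        range(len(experts)), key=lambda i: gate_scores[i], reverse=True
    )[:top_k]
    # Normalize the selected scores so the blend weights sum to 1.
    weights = softmax([gate_scores[i] for i in ranked])
    # Only the selected experts are evaluated; the rest are skipped,
    # which is where the compute savings come from.
    output = sum(w * experts[i](x) for w, i in zip(weights, ranked))
    return output, ranked


if __name__ == "__main__":
    # Hypothetical "experts": trivial functions in place of sub-networks.
    experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x ** 2, lambda x: -x]
    gate_scores = [0.1, 2.0, 2.0, -1.0]  # illustrative values only
    output, chosen = route(gate_scores, experts, x=3.0)
    print(f"experts used: {chosen}, blended output: {output}")
```

The point of the design is that only a fraction of the network runs for any given input, which is consistent with Google's claim of comparable quality for less compute.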

Google says that Gemini 1.5 can perform “complex reasoning about vast amounts of information,” and gives an example of analyzing a 402-page transcript of Apollo 11’s mission to the Moon. It’s impressive to process documents that large, but the model, like every large language model, is highly likely to confabulate interpretations across large contexts. We wouldn’t trust it to soundly analyze 1 million tokens without mistakes, so relying on it for that would mean putting a lot of faith in poorly understood technology.

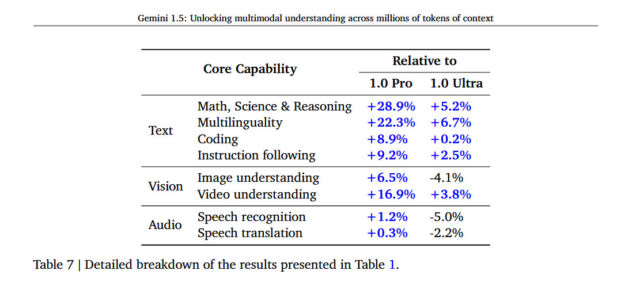

For those interested in diving into technical details, Google has released a technical report on Gemini 1.5 that appears to show Gemini performing favorably versus GPT-4 Turbo on various tasks, but it’s also important to note that the selection and interpretation of those benchmarks can be subjective. The report does give some numbers on how much better 1.5 is compared to 1.0, saying it’s 28.9 percent better than 1.0 Pro at “Math, Science & Reasoning” and 5.2 percent better at those subjects than 1.0 Ultra.

But for now, we’re still kind of shocked that Google would launch this particular model at this particular moment in time. Is it trying to get ahead of something that it knows might be just around the corner, like OpenAI’s unreleased GPT-5, for instance? We’ll keep digging and let you know what we find.

Google says that a limited preview of 1.5 Pro is available now for developers via AI Studio and Vertex AI with a 128,000-token context window, scaling up to 1 million tokens later. Gemini 1.5 apparently has not come to the Gemini chatbot (formerly Bard) yet.
